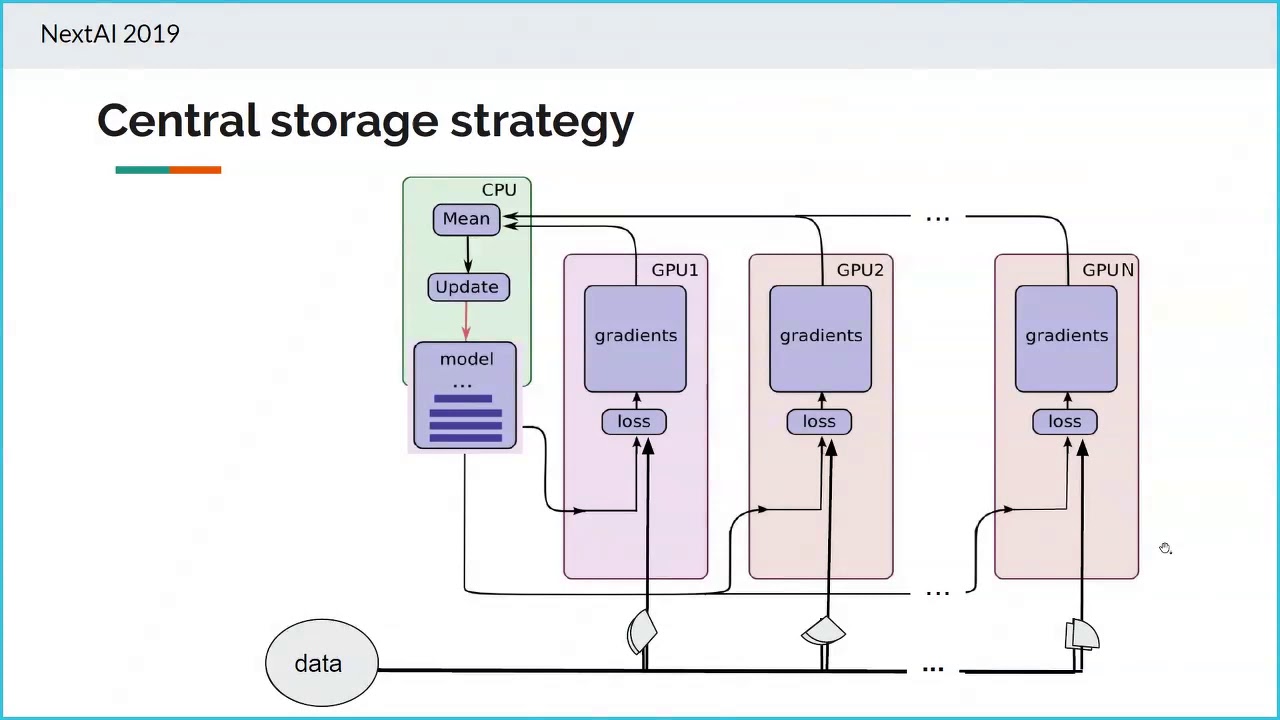

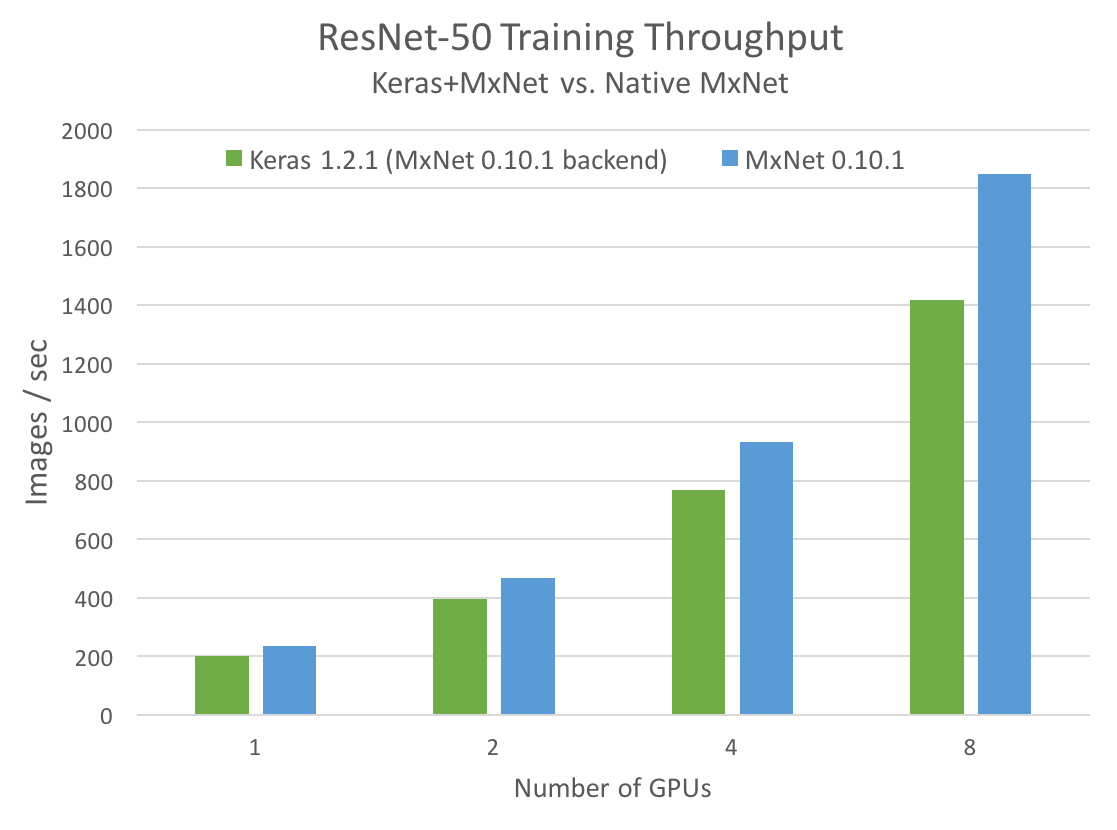

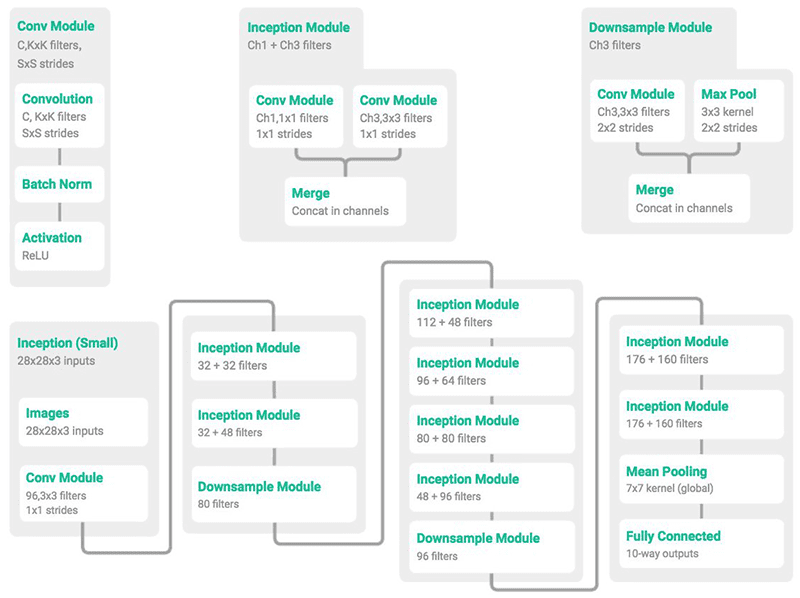

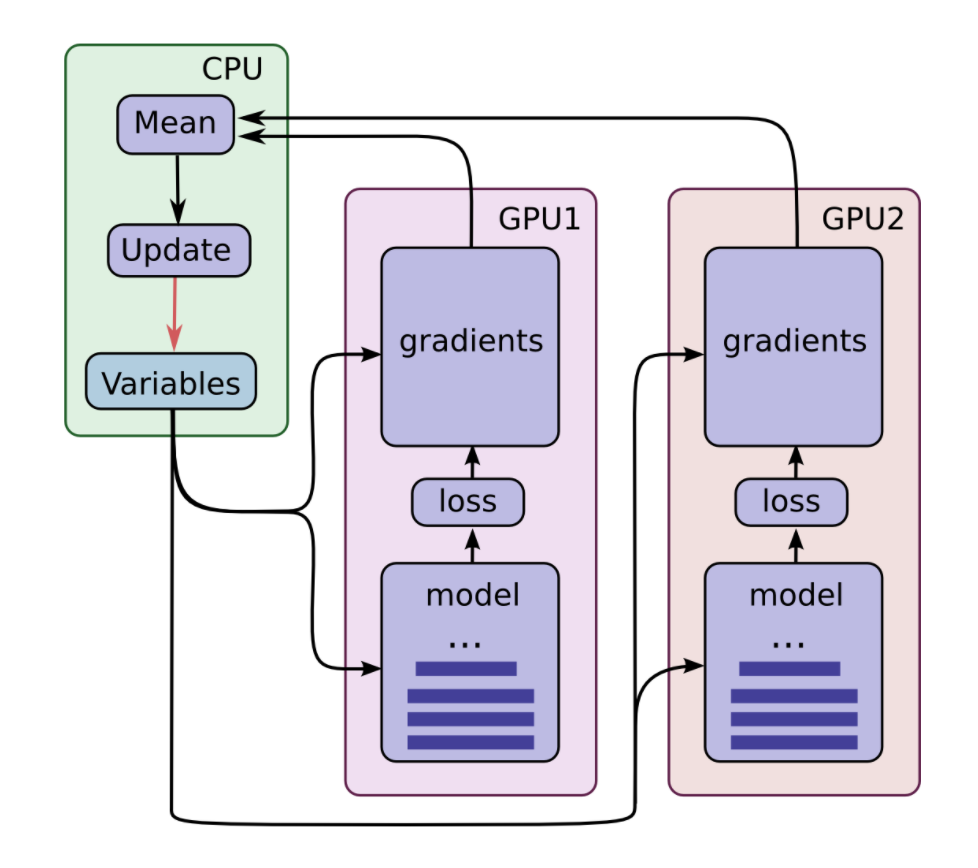

Towards Efficient Multi-GPU Training in Keras with TensorFlow | by Bohumír Zámečník | Rossum | Medium

Installing TensorFlow 2.1.0 with Keras 2.2.4 for CPU on Windows 10 with Anaconda 5.2.0 for Python 3.6.5 | James D. McCaffrey

Multi-Class Classification Tutorial with the Keras Deep Learning Library - MachineLearningMastery.com

One GPU is utilized 100% and Second GPU utilization is 0% - CUDA Programming and Performance - NVIDIA Developer Forums